
Journals: Learning how to sysadmin

- Goals and expectations

- Resources, preparations, and execution

- Habits and todos

- 2022-11-10

- 2022-11-11

- 2022-11-12

- 2022-11-13

- 2022-11-16

- 2022-11-22

- 2022-11-23

- 2022-11-24

- 2022-11-26

- 2022-11-27

- 2022-11-28

- 2022-12-01

- 2022-12-02

- 2022-12-03

- 2022-12-04

- 2022-12-05 - 2022-12-07

- 2022-12-08

- 2022-12-10

- 2022-12-12

- 2022-12-15

- 2022-12-18

- 2023-01-01

- 2023-01-04

- 2023-01-06

- 2023-01-11

- 2023-01-12

- 2023-01-13

- 2023-01-14

- 2023-01-15

- 2023-01-16

- 2023-01-17

- 2023-01-18

- 2023-01-19

- 2023-01-21

Goals and expectations

While I've been managing a Linux desktop installation, it isn't the same as administering systems in a server setting. So I want to learn by properly managing a server on some cloud platform (e.g., Amazon Web Services, Google Cloud Platform, Microsoft Azure). I want to manage one (maybe with a Debian Linux installation) for at least a quarter of a year and reach a level where I could get a job out of it.

To get the lay of the land, I looked at job listings from various sources 1. The skill set they require on average seems to lean toward the following:

- Knowledge in Linux components

  - nginx

  - Shell scripting

- Development deployment tools, mostly related to containers such as…

  - Kubernetes

  - Docker (or Podman)

- Knowledge in managing Windows servers

  - Active Directory

  - LDAP user and group administration

  - PowerShell

This means I would have to start on 2022-11-10 and reach that level by 2023-02-10 at the latest.

Resources, preparations, and execution

The simplest way to get started is to maintain a server for a variety of purposes. You could maintain a server for…

- A password manager for your private accounts (e.g., Bitwarden or Vaultwarden).

- A game server for your friends (e.g., Minecraft).

- A code forge to store your own code (e.g., GitLab, Gitea).

- An audio server that can be used to play anywhere on your devices (e.g., Funkwhale, Navidrome).

- A content management system to serve your organization (e.g., WordPress, Grav).

- A web server for your web applications.

- A chat server for your community (e.g., XMPP server, Matrix server).

- A social media instance for your community (e.g., Mastodon, Pleroma, Misskey, Lemmy).

In order to get started, you have to choose a virtual private server (VPS) host. There are several of them, including the big 3 cloud providers (AWS, Google Cloud Platform, and Microsoft Azure), DigitalOcean, Hetzner Cloud, Linode, and Vultr. Each of them has a starting tier that costs under $5 on average (the big 3 also have a free tier as long as you provide valid credit card information).

Beyond the services listed above, there are a lot of other applications and services that might interest you.

- You could look into the services offered by YunoHost, a Linux distribution primarily targeted at self-hosting services.

- There are a lot of services listed in awesome-selfhosted/awesome-selfhosted on GitHub.

- Any of the services available as NixOS modules.

Take note: each daily entry represents the progress made in 4 pomodoro sessions (2 hours) that day. The writing is done afterwards, recollecting my experience in as much detail as possible. Any time spent beyond that is explicitly noted in the entry.

Habits and todos

DONE Learn kubernetes and Google Kubernetes Engine

SCHEDULED: <2022-11-10 Thu>

DONE Deploy a Vaultwarden instance

DONE Deploy an Archivebox service

DONE Deploy an RSS feed service

DONE Deploy a cluster

TODO Manage a Linux server for at least 3 months

<2022-11-10 Thu>–<2023-02-10 Fri>

TODO Manage a Windows server for at least 3 months

<2022-12-10 Sat>–<2023-03-10 Fri>

TODO Deploy a NixOS image in virtual private server host

DONE Deploy with deploy-rs

DONE Deploy in Google Cloud Platform Compute Engine

HOLD Deploy in Microsoft Azure Linux VM

TODO Deploy in Hetzner Cloud

TODO Self-hosted services

DONE Vaultwarden

DONE Gitea

HOLD Sourcehut

TODO OpenLDAP

TODO MinIO

TODO OpenVPN

2022-11-10

Started to journal my journey of system administration up to semi-professional standards. For now, I'm scouting my options, though I previously tried Google Cloud Platform and deployed a Kubernetes cluster on it. I might manage a Linux virtual machine right away using Compute Engine from Google Cloud Platform. I'm very tempted to do it with a NixOS image as laid out in this blog post; however, I'm going with Debian as it is closer to a traditional setup. I may even consider something like Red Hat Enterprise Linux or Rocky Linux.

2022-11-11

Retested the cert-manager installation following their guide for Google Kubernetes Engine, and I still wasn't able to complete it using only a raw IP address. It's an opportunity to buy a domain for myself and follow the guide again next time.

I'm very very tempted to make a NixOS deployment image as I've already seen what I can do with it but for now, I'll stick with the traditional tools. However… managing both is an option. :)

2022-11-12

For now, I've just been managing a Debian virtual machine and successfully launched a publicly accessible web server. It mostly involved enabling the web server's service and configuring the firewall. It cannot be accessed easily since the instance's external IP is ephemeral. As for HTTPS access, there is none, since certificates (at least from Let's Encrypt) are only signed for domain names and I'm only accessing the server with a bare IP address.

This is the first time I've had to worry about lower-level things that I never touched in my usual processes, such as deploying websites.

Anyway, in case you're curious: why Debian?

- It is stable. Though, I have options such as deploying images with NixOS.

- Its support and community are large. It is a battle-tested distribution with a large package set, and lots of resources have been written for it.

- Consequently, it has extensive documentation, not only for beginners but also for various aspects like its releases. While its community wiki is not as thoroughly documented as the Arch Wiki, it often contains enough information to get you by when managing a Debian server.

2022-11-13

I didn't do much on this day. On the flip side, I was overworrying about the price even though I'm on a free trial and Google only charges if I opt in to activate the full account. It turns out it isn't much of a worry if I leave it alone. Having an ephemeral external IP address and such a low-usage instance might have something to do with it.

For now, I plan to create a simple wiki server with the traditional LAMP stack. What is it going to contain? Simply my findings, mainly on configuring MediaWiki, as I'm assuming the role of a sysadmin.

2022-11-16

Unfortunately, progress has stalled since I don't have a usable bank account for now. Once it is available, progress should be quick with a domain name for me to mess around with.

A domain name is surprisingly affordable. Most of the expenses come from the services attached to it, such as email hosting for the domain and whatnot. For the domain registrar, I picked Porkbun since it has a lot of sales and is generally cheaper than something like Google Domains.

2022-11-22

Set up my own blog with the domain. It was slightly confusing as this is my first time diving into server and domain management. It's the first time I've encountered concepts like DNS, CDNs, and managing DNS records, all of which I learned from the Cloudflare documentation of all things.

With the DNS management in place, I mostly learned how DNS interacts with the servers and makes them discoverable to other servers, such as the effect of DNS caching, where changes can take up to a day to take effect.

The main problem I encountered is redirecting my blog at https://foo-dogsquared.github.io to my custom domain. However, I soon found out that all of the pages under my GitHub Pages domain are affected, making all of my project pages part of the custom domain. The "easy" solution is to deploy my blog with a separate service and deploy the main GitHub Pages site with a redirection page. It's also the first time I've used Netlify in a long while.

The chain of problems never ends, as now I would like to deploy my blog with Netlify easily. Unfortunately, unlike GitHub Actions, Netlify doesn't have an easy way to install and bootstrap the Nix package manager. A solution for this is to use GitHub Actions to build and Netlify to deploy, which, fortunately, someone has already put together.

Most of the problems I have come from misunderstandings and misconceptions of how DNS and server management work. One of the more prominent misconceptions I had is that DNS management happens entirely on the server, neglecting how clients (and their DNS caches) can also affect the browsing experience. This unfortunately took me two hours to figure out while completely missing the real problem. Whoops… I still have some misunderstandings about DNS though. I'll have to go back to the basics.

I also thought each project page on GitHub Pages could stay separate from the custom domain. So that's another concept I couldn't easily wrap my head around.

Despite the fumbling around, I would say not bad. Now, I'm very very motivated to go into self-hosting mode as I continue to host my personal notes (which I keep neglecting to update). I would like to self-host a Vaultwarden and an ArchiveBox instance the next day.

2022-11-23

Another day, another round of DNS misadventures and misunderstandings. This time, most of the problems came from misunderstanding how hosting works, which is far off from my recent idea of a hosting provider where parts from different domains can be specified to make up the frontend of your website. It turns out this is not the case.

I was able to deploy my blog with Netlify and set it to my domain.

Now, foodogsquared.one is open for the world!

I still haven't solved the issue of missing icons in the deployment, but I'm very confident it is a DNS issue seeing as the "missing files" can be viewed, just served with inappropriate headers that cause them to be blocked.

Not to mention, there are missing redirections for the old site, which is inconvenient.

My only hope is that nobody visits my site, as I've already been dormant on most services this year.

The whole thing only took about an hour, most of which was spent questioning and swatting the cache and tumbling over Porkbun's interface, as I didn't realize my DNS records kept being reset every time I pointed the domain to my GitHub Pages instance.

In any case, I'm just going to delay fixing the issues with the blog site because I want to self-host some services. ;p

In this case, I want different services as part of one domain (e.g., my Vaultwarden and ArchiveBox instances under foodogsquared.one).

It turns out that while Netlify allows some form of domain management, it simply isn't flexible enough, especially since the services I put together are likely to come from different sources.

I mean, simply deploying my blog already requires Netlify; what more for self-hosted services that Netlify simply cannot handle?

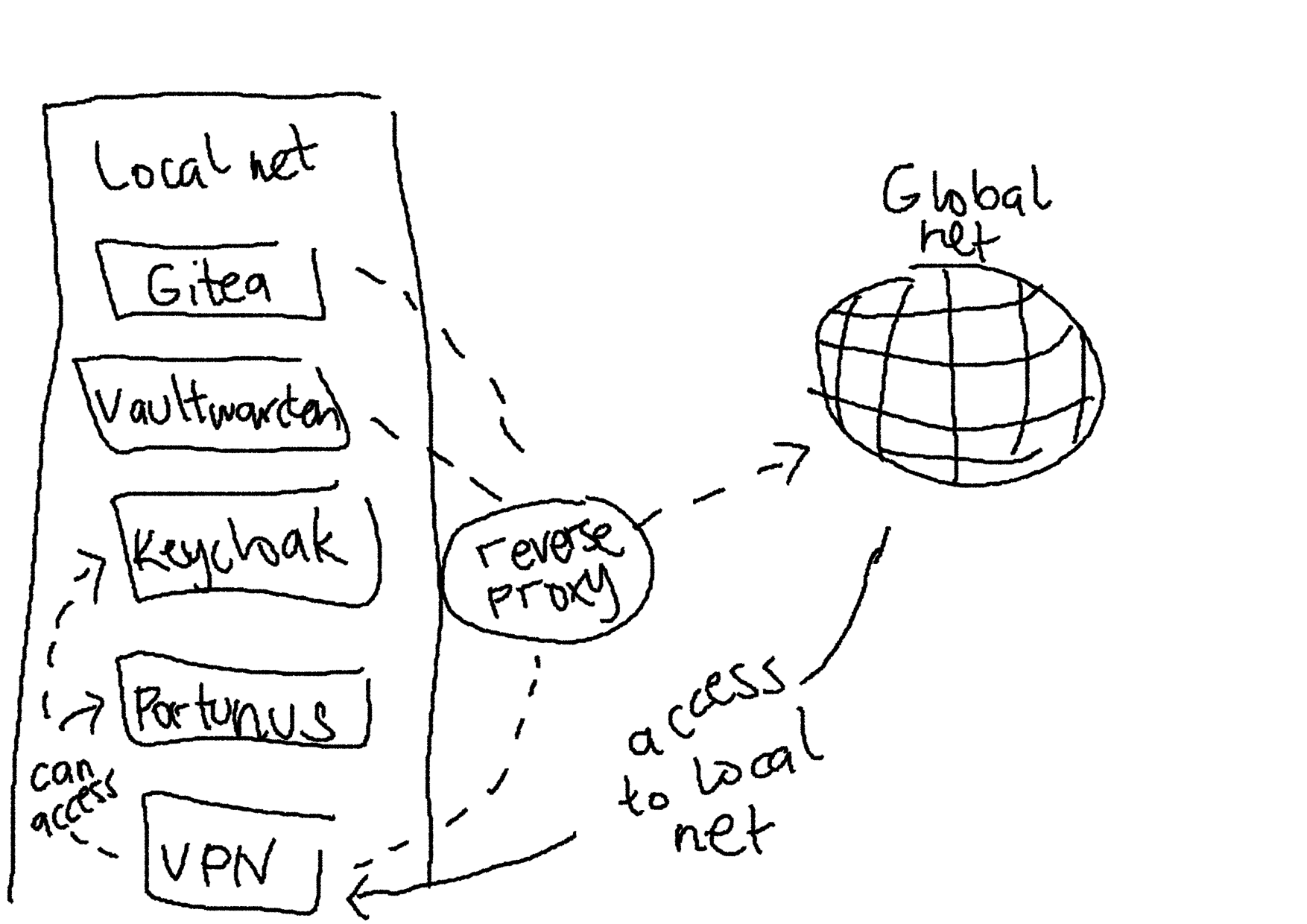

To make it possible, I have to manage a reverse proxy that lets me stitch those services together under one domain.

That is, I want to access my Vaultwarden instance at vault.foodogsquared.one, my feeds at feeds.foodogsquared.one, and my self-hosted code at forge.foodogsquared.one, among other examples.

Luckily for me, several of them exist, such as Nginx, Caddy, and the good ol' Apache HTTP Server, all of which have capabilities beyond a simple web server.

In the end, I chose Nginx since it is a popular tool and a lot of job listings include knowledge of nginx in their wishlists.
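To make the subdomain idea concrete, here is a minimal sketch of such a reverse proxy written as a NixOS module. It assumes Vaultwarden is told to listen on 127.0.0.1:8222 and Gitea stays on its default port 3000; both ports are assumptions for illustration, and feeds.foodogsquared.one would follow the same shape.

```nix
{
  # Keep Vaultwarden on loopback only; nginx is the public entry point.
  # The port is an assumption for this sketch.
  services.vaultwarden.config = {
    ROCKET_ADDRESS = "127.0.0.1";
    ROCKET_PORT = 8222;
  };

  services.nginx = {
    enable = true;
    # Adds the usual reverse proxy headers (Host, X-Forwarded-For, ...).
    recommendedProxySettings = true;
    recommendedTlsSettings = true;

    virtualHosts = {
      # One virtual host per subdomain, each forwarding to a local service.
      "vault.foodogsquared.one" = {
        forceSSL = true;
        # Requests a certificate through security.acme, which also needs
        # acceptTerms and an email address configured.
        enableACME = true;
        locations."/".proxyPass = "http://127.0.0.1:8222";
      };

      "forge.foodogsquared.one" = {
        forceSSL = true;
        enableACME = true;
        # 3000 is Gitea's default HTTP port.
        locations."/".proxyPass = "http://127.0.0.1:3000";
      };
    };
  };
}
```

Ports 80 and 443 still have to be open, both in the NixOS firewall and in the cloud provider's firewall, for nginx to be reachable.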

This time, I gave in to the temptation to configure all of the servers with NixOS. Alongside the fact that I've already had my fill of imperatively managing them servers, there are three main factors behind this decision:

- The declarative configuration.

- A framework for generating custom images (nixos-generators) that is built on top of nixpkgs.

- The fact I already have an existing configuration that can serve as a framework to easily instantiate individual nodes.

The hardest part is creating my first image, which is going to be deployed in Google Cloud Platform. The second hardest part is managing my Google Cloud Platform account, given the mountain of things I have to keep in mind whenever I'm staring at the dashboards of several cloud providers. The third hardest part is the number of prerequisites before I can even start doing one thing, which the second hardest part is already giving me. Unfortunate…

On the other hand, my NixOS configuration is slowly turning into a nice monorepo for deploying everything I want. It is surprisingly easy to manage, but the part giving me the hardest time is the deployment. As for private files and deployments, they're easy to manage with Git worktrees, though it's somewhat tedious to keep my public and private branches in sync.

2022-11-24

The configuration for Vaultwarden is in place in my first NixOS-powered deployment, but most of the problems come from my lack of understanding of the networking infrastructure. Fortunately for me, there is the Debian Handbook, with details on each facet of the infrastructure. It is specifically aimed at Debian systems, but it is good enough if you're familiar with the interface (which is just a command-line shell such as Bash).

Before that, the trouble came from setting up a mailer, which is troublesome if you only have a Gmail account. I'm also considering moving my email provider from Gmail to something else. Candidates include Fastmail, Zoho Mail, and mailbox.org, all of which have paid plans (and long trial periods of at least 2 weeks). In the end, I decided not to use mailing services at all for my self-hosted services for the time being.

As for self-hosting my code, I did initially consider Sourcehut since I'm largely interested in how many resources it needs to host. However, that didn't work out, as it seems to require a lot of maintenance for my current needs, which are simple right now. I still heavily consider it for future endeavors though, especially since its comprehensive documentation and integration of services are just nice to have. Not to mention, Sourcehut is still in alpha, which indicates the maintainers still have plans for it. Its primary maintainer especially considers Sourcehut to be easier to self-host, so the plan of self-hosting Sourcehut is not entirely thrown away.

In the end, I decided to use Gitea considering there is already a NixOS module for it (at least in version 22.11) and that it is implementing a new way for forges to communicate with each other through ForgeFed. This means collaboration between different instances is very much possible, and I'm in support of that.

Compared to Sourcehut, Gitea is simpler to initialize; I was able to start an instance quickly. Most of the time went to reading through the configuration options and testing the instance.

2022-11-26

The deployment failed because I forgot secrets management is a thing. Each infrastructure-as-a-service provider apparently has its own offering, such as Google Cloud Platform KMS, Microsoft Azure Key Vault, and Amazon Web Services KMS, not to mention standalone tools like HashiCorp Vault. It's a good thing I'm using sops for this, since it can use any of them as a key backend.
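For reference, a minimal sketch of wiring a sops-encrypted secret into a service, assuming the sops-nix NixOS module is the integration in use (the entry only says sops). The file path and secret name are hypothetical, and how the host obtains its decryption key (a KMS, age, and so on) is left out.

```nix
{ config, ... }:

{
  # Assumes the sops-nix module is already imported into this configuration.
  sops = {
    # Hypothetical encrypted YAML file kept next to the configuration.
    defaultSopsFile = ./secrets/secrets.yaml;

    # "vaultwarden/env" refers to the nested key vaultwarden -> env in that file.
    # It is decrypted at activation under /run/secrets, readable by its owner.
    secrets."vaultwarden/env".owner = "vaultwarden";
  };

  # The service reads the decrypted file at runtime, so the plaintext never
  # ends up in the world-readable Nix store.
  services.vaultwarden.environmentFile = config.sops.secrets."vaultwarden/env".path;
}
```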

This session was shorter than the previous days', but it should go back to normal, including the time to journal this abomination. Around this time, I also signed up for the Microsoft Azure free tier subscription to try managing a Windows server this time around. It should be simple enough to start, but I have absolutely no idea how to provision one; compared to my knowledge of Linux-based systems, it is non-existent. So most of the time will be spent learning all of the concepts from absolute zero experience. Should be fun…

I've also decided to go full gung-ho on deploying Linux-based systems with NixOS. I've deleted all of the non-NixOS Linux-based systems in my fleet and started generating a bunch of NixOS GCE images. Should be doubly fun…

2022-11-27

For now, I've gone back to managing a deployment with Linux-based systems and am trying to manage it properly this time around.

For a start, I decided to manage the static websites separately from the server since Netlify apparently does not play well with proxies. At the very least, those websites can now go at their own pace instead of being deployed all together.

Second, most of the services are misconfigured. Classic… Most of the domains and settings are not properly configured, which means I have to review the documentation for the nth time. It's not exactly a chore, especially since this is my first time managing all these things. Not to mention, NixOS surely does some things differently, which does not go well with me, especially since I rely on resources that are mostly written with the mainstream distributions in mind (i.e., Debian, Ubuntu).

Finally, I'm now going to add one more component to my server: PostgreSQL. All of the services I've used so far can be configured to use SQLite, which makes things easier, but SQLite is an embedded database living in a single file on the filesystem, unlike PostgreSQL, which is a server that clients connect to over the network (or a local Unix socket). Fortunately for me, its documentation is easy to follow.

For tomorrow at least, I plan to add one more component to the mix: an LDAP server for user and group management, which has a strong presence in the job listings I've seen. Fortunately for me, there is an OpenLDAP service module already available in NixOS. I just have to be careful not to bite off more than I can chew with this seemingly simple server.

2022-11-28

Welp, most of the services' configurations should be fixed, but the last thing that remains is proper deployment with the secrets. While I could do that by simply transferring the private key into the virtual machine, it just misses the point of using a key management system, which GCP already has. Pretty much, I'd be missing out if I didn't use it, so I have to use it. :)

From what I can understand, with sops, you have to set the proper credentials to be able to decrypt the secrets. That's fine for a local development environment but it isn't nice for deployed systems. One way to set them properly is by using a service account with the proper permissions, which in this case are permissions to encrypt and decrypt with GCP KMS keys (e.g., the Cloud KMS CryptoKey Encrypter/Decrypter role).

So I created a user-managed service account for the server, set the proper permissions, and made the user-managed account impersonate the default service account because I don't want to manage that manually. Be sure to read up more on how to properly manage service accounts.

The reason I laid it all out here is that the documentation of Google Cloud Platform is surprisingly nice to use… sometimes.

The way they show different ways to accomplish a task with different tools (e.g., Console, gcloud) is a nice touch.

However, the amount of looping links makes it easy to get overwhelmed.

Am I the only one who repeatedly bounces between different pages just to get the idea from a single page?

I understand the reasoning behind a knowledge base that caters to both new and experienced users, but it's still something I experienced.

I feel like simply doing the steps mentioned above should've taken way less time than it did. Most of the time was spent staring at those pages, checking whether I was following them right. This is where I feel like I should've really started with Qwiklabs, which I didn't know was a thing when I started. Welp…

2022-12-01

Here we go, start of December. Only two months to go before the deadline to become halfway to professional-level (or at least getting paid).

I didn't do anything in the last two days, so there are no entries for them.

Still having some problems, mainly from the PostgreSQL service this time. I'll use this opportunity to experiment with debugging and maintaining services with PostgreSQL. Thankfully, its documentation is very comprehensive, especially since it has a dedicated chapter for server administration. I'm only starting to wrap my head around the concepts of a database and its management.

The errors from the database service most likely come from a lack of proper privileges. From the Vaultwarden service, the new error this time looks like this:

```
Dec 01 01:41:03 localhost vaultwarden[762]: [2022-12-01 01:41:03.533][vaultwarden::util][WARN] Can't connect to database, retrying: DieselMig.
Dec 01 01:41:03 localhost vaultwarden[762]: [CAUSE] QueryError(
Dec 01 01:41:03 localhost vaultwarden[762]: DatabaseError(
Dec 01 01:41:03 localhost vaultwarden[762]: __Unknown,
Dec 01 01:41:03 localhost vaultwarden[762]: "permission denied for schema public",
Dec 01 01:41:03 localhost vaultwarden[762]: ),
Dec 01 01:41:03 localhost vaultwarden[762]: )
```

The error is fairly intuitive: it's a permission error on the 'public' schema. I simply resolved it by adding the permissions in the NixOS config like in the following snippet.

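```nix
{ config, lib, pkgs, ... }:

let
  # Example values; both are commonly just "vaultwarden".
  vaultwardenUserName = "vaultwarden";
  vaultwardenDbName = "vaultwarden";
in
{
  services.postgresql = {
    enable = true;
    ensureDatabases = [ vaultwardenDbName ];
    ensureUsers = [
      {
        name = vaultwardenUserName;
        ensurePermissions = {
          # Privileges on the database created above, referenced by its name.
          "DATABASE ${vaultwardenDbName}" = "ALL PRIVILEGES";
          # Privileges on the tables living in the 'public' schema.
          "ALL TABLES IN SCHEMA public" = "ALL PRIVILEGES";
        };
      }
    ];
  };
}
```

As an additional fact, I quickly came across in the documentation that the 'public' schema is the fallback schema: objects created without a schema name end up there when no schema named after the current user exists. That's something to keep in mind in the future.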

But anyway, here's a light write-up summarizing my understanding of the database, starting with its authentication process, the part I've spent the most time understanding.

Being accessible in different ways, widely available to other users, and holding data for various applications, the PostgreSQL service has ways to make sure the database is only accessed by trusted users. This is where authentication comes in, similar to how POSIX-based systems authenticate access to various services.

Inside the database, various services (which serve as clients) want to access their own data. To let them do so safely, PostgreSQL has several ways to verify its clients:

- Tried-and-true password authentication for the database user the client connects as.

- LDAP authentication.

- Simply trusting certain connections outright by listing them, along with the users they may connect as, in a file: pg_hba.conf (the trust method). The same file is also where each connection type gets assigned one of the other methods.

- Accessing the database as the operating system user with the same name as the database user. This makes sense: there is most likely a dedicated user specifically created for a certain service, alongside a database for that service. This is referred to as peer authentication for local connections (ident authentication is the TCP counterpart). Examples include hosted services with a dedicated setup (e.g., user and user group, database), as they map logically from the operating system and its different components.
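In NixOS, those pg_hba.conf rules can be written through services.postgresql.authentication. A minimal sketch, assuming an operating system user and a database user both named vaultwarden (hypothetical names); each line pairs a connection type with one of the methods above.

```nix
{
  services.postgresql = {
    enable = true;
    # Extra pg_hba.conf lines; PostgreSQL uses the first line that matches.
    #   local: Unix socket, peer = OS user name must equal the database user name
    #   host:  TCP from localhost, scram-sha-256 = password authentication
    # TYPE  DATABASE     USER         ADDRESS        METHOD
    authentication = ''
      local vaultwarden  vaultwarden                 peer
      host  vaultwarden  vaultwarden  127.0.0.1/32   scram-sha-256
    '';
  };
}
```

With the peer line, a service running as the vaultwarden system user can connect over the local socket without any password at all, which is exactly the OS-to-database mapping described above.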

As for the plan to maintain an LDAP server and manage users with it, I'll hopefully start sometime this week. For now, the focus is debugging and maintaining a server, mainly by SSHing into it and getting used to the maintenance tools that come with systemd.

There are also some things I've learned, such as:

- Creating a new unit file easily with systemctl edit --full --force $UNIT, which places it in one of the unit paths.

- Easily viewing how much space the journal files take with journalctl --disk-usage, which also works for the user-level systemd instance with the --user flag.

- Flushing all ephemeral journal files from /run to persistent storage with journalctl --flush.

- Rotating logs with journalctl --rotate.

- Ports lower than 1024 are privileged ports and normal users cannot use them. 2

I'll get around to using a traditional Linux distro (Debian, again). While server management with NixOS is nice, I think getting used to the traditional environment nets more credibility. Though, it is getting easier with time to map the concepts I'm picking up from NixOS to the traditional setup, especially since most of what I do in NixOS is setting up services, which could be done in any Linux environment anyway.

While I'm at it, I'm starting to look into backup services. I'm already using BorgBase with only the free 10 GB, but that quickly ran out. For now, I'm looking for a cheaper option if there is one.

2022-12-02

Hey, my SendGrid account application has been approved. Well, that's one more service component to enable.

There's also one very stupid mistake I didn't realize I'd been making from the very beginning: I keep hitting Let's Encrypt's Duplicate Certificate limit and I keep forgetting to back up the certificates. I KEEP DOING THIS FOR THE PRODUCTION DEPLOYMENT, WHAT THE HELL! I always thought production would only give the same errors as the development deployment. Welp, I'll just wait a week, then everything should be fine and dandy for production. Meanwhile, I'll use test environments with test subdomains. Or I could just request a certificate for a different "exact set" of domains, as mentioned in the Workarounds section of the aforementioned page.
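For the testing side, the NixOS ACME module can point every request at the Let's Encrypt staging environment, which has much looser rate limits but issues certificates that browsers don't trust. A minimal sketch, assuming security.acme.acceptTerms and an email address are already set elsewhere in the configuration:

```nix
{
  # Send every certificate request to the Let's Encrypt staging endpoint.
  # Removing this line falls back to the production endpoint.
  security.acme.defaults.server =
    "https://acme-staging-v02.api.letsencrypt.org/directory";
}
```

On the backup side, NixOS keeps the issued certificates under /var/lib/acme, so including that directory in backups avoids requesting the same certificates again after rebuilding a server.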

2022-12-03

Today's misconfiguration theme is permissions: permission errors from a user being unable to access certain PostgreSQL schemas, permission errors from users lacking access to files, et cetera, et cetera. Though, we're now in the home stretch without many shenanigans. I'm now slowly chewing through all of the things I bit off.

On the other hand, I found out how certificates are generated in NixOS with the default workflow. It uses lego, which supports tons of DNS providers, including Porkbun, the domain registrar I bought my domain name from. Nice! Now there is more automation, including setting the DNS records needed for the DNS-01 challenge, and the shenanigans with certificates are in the past (or at least significantly reduced). All I have to take care of is my secrets file and managing my servers with some security, for which I created the following initial guidelines as a start.
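A minimal sketch of that setup, assuming one certificate for the apex domain plus a wildcard covering the subdomains, with option names as of NixOS 22.11. The email address and credentials path are hypothetical; PORKBUN_API_KEY and PORKBUN_SECRET_API_KEY are the variables lego's Porkbun provider reads.

```nix
{
  security.acme = {
    acceptTerms = true;
    defaults.email = "admin@foodogsquared.one"; # hypothetical address

    certs."foodogsquared.one" = {
      # The wildcard covers every subdomain; wildcards require a DNS-01 challenge.
      extraDomainNames = [ "*.foodogsquared.one" ];
      dnsProvider = "porkbun";
      # Hypothetical path to an environment file for lego, containing:
      #   PORKBUN_API_KEY=...
      #   PORKBUN_SECRET_API_KEY=...
      credentialsFile = "/run/secrets/acme-porkbun";
      # Let nginx read the issued certificate and key.
      group = "nginx";
    };
  };

  # Reuse the shared certificate on a virtual host (in place of enableACME).
  services.nginx.virtualHosts."vault.foodogsquared.one" = {
    forceSSL = true;
    useACMEHost = "foodogsquared.one";
  };
}
```

One shared certificate also means fewer issuance requests overall, which helps with the rate limits from the previous entry.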

- Starting with removing the keys from the various KMSes, such as the one in Google Cloud Platform. Keeping them makes it easy for anyone who gains access to one of the users on a virtual machine instance to decrypt the secrets, since all of the instances are configured with their respective KMS enabled. So they have to go.

- Giving more thought to managing secrets with their respective keys, for example, following least privilege with the minimal number of keys plus a fallback key for emergencies. 3

While taking care of that, I also found out about the Porkbun API, which means I could create a simple program to interact with my DNS records instead of going to the website. There are still some things to set up on the website but that's not much of a problem.

2022-12-04

Took a more serious approach to learning PostgreSQL this time for two main reasons:

- Apparently, there are changes related to the public schema (PostgreSQL 15 no longer lets every user create objects in it by default), which is where most of the problems come from. That is indeed something to keep in mind, as the 2022-12-01 entry already mentioned.

- I only haphazardly glanced at the documentation before. Really, the approach was scattered: just debug the errors from the services and go to the appropriate chapters. Which is fine for the most part, but it can completely screw me over if I'm not mindful of the concepts.

With that information in mind, I deduced the problems really come from the applications using the default schema, public (which is problematic for my configuration).

But I missed a very, very crucial piece of information that made me bash my head against this simple problem for hours over the previous days.

Apparently, most of them services can be configured to use another schema.

Yeah.

That's it.

What the hail…

However, the ordeal did help me understand a bit more about how to properly manage a PostgreSQL database.

Specifically, what I did afterwards was try to understand how permissions and other objects are handled throughout the entire cluster. More specifically: schemas and roles, where my understanding proved to be lackluster.

With this new understanding, I came across a new way to manage them schemas.

The PostgreSQL maintainers encourage a more secure way of managing schemas, referred to as a secure schema usage pattern.

In the encouraged practice, each database user has their own schema (named after the user, of course), and the schema search path is set to only include the user's own schema (e.g., search_path = "$user").

Then, with applications offering a setting to configure the schema, you can make each service use the schema of its corresponding user.

I can see what the maintainers are encouraging here. With the different ways to authenticate (especially with the ident authentication and the like), each service which may be running with their corresponding operating system user has a corresponding database user that owns a corresponding schema for the service data and whatever objects they want to access. It's a nice, easy-to-understand practice that easily maps between the operating system and the database objects. With the secure schema usage pattern, you're essentially playing Match-3 for the sake of simplifying the system. Anything that reduces cognitive load for that is good enough for me.
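A minimal sketch of that pattern in NixOS terms, assuming a vaultwarden role (hypothetical name). It also assumes the PostgreSQL module's postStart script defines $PSQL and creates the ensureUsers roles before anything appended with lib.mkAfter runs, which is how the module behaved around NixOS 22.11 as far as I know; newer releases may differ.

```nix
{ lib, ... }:

{
  services.postgresql = {
    enable = true;
    ensureUsers = [ { name = "vaultwarden"; } ];
    # Unqualified names resolve only in the schema named after the connecting
    # role; 'public' is left out of the search path entirely.
    settings.search_path = "\"$user\"";
  };

  # Give the role its own schema, owned by that role (idempotent on restarts).
  systemd.services.postgresql.postStart = lib.mkAfter ''
    $PSQL -tAc 'CREATE SCHEMA IF NOT EXISTS vaultwarden AUTHORIZATION vaultwarden'
  '';
}
```

With the schema in place, the service only has to connect as its own role; its tables then land in the vaultwarden schema instead of public.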

On the other hand, the workflow for deploying NixOS-based systems is great with deploy-rs. The only issue is that I cannot deploy the config when it asks for the user's password (for sudo): the terminal usually gets mangled while typing it into the SSH'd process. Thankfully, one of the comments shows a solution by disabling magic rollback, which is unfortunate as I'd really like to have it, but it works. Another comment shows what's really happening under the hood, which I can confirm with the part about how the input is echoed; it fits my experience of seemingly janky input with mangled output. Another quick solution is configuring the SSH user for passwordless sudo, which is not great to me. I don't like passwordless sudo, especially since I've set a password for the user, and passwordless sudo would make it essentially useless.
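For reference, this is roughly where that workaround lives in a flake using deploy-rs. The node name, hostname, and SSH user are hypothetical, and nixosConfigurations.myserver is assumed to be defined elsewhere in the same flake.

```nix
{
  inputs = {
    nixpkgs.url = "github:NixOS/nixpkgs/nixos-22.11";
    deploy-rs.url = "github:serokell/deploy-rs";
  };

  outputs = { self, nixpkgs, deploy-rs }: {
    # nixosConfigurations.myserver = nixpkgs.lib.nixosSystem { ... };  (defined elsewhere)

    deploy.nodes.myserver = {
      hostname = "myserver.example.com"; # hypothetical address
      sshUser = "admin"; # hypothetical non-root user with sudo

      # The workaround: skip magic rollback. deploy-rs then no longer waits for
      # a confirmation after activation, so a deployment that breaks SSH access
      # is NOT rolled back automatically.
      magicRollback = false;

      profiles.system = {
        user = "root"; # activate as root, via sudo from sshUser
        path = deploy-rs.lib.x86_64-linux.activate.nixos
          self.nixosConfigurations.myserver;
      };
    };
  };
}
```

The trade-off is exactly the one mentioned above: the password prompt works, but a bad deployment has to be rolled back by hand.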

2022-12-05 - 2022-12-07

Nothing much happened here aside from sporadic debugging sessions. I took this opportunity to learn how to debug the system mainly with systemd and PostgreSQL components.

More specifically, some new things I found:

- I found out how great the systemctl show subcommand is. You can view the properties of a unit, which is nice for debugging systemd services. Not to mention, you can select specific properties with the -P flag (e.g., -P User, -P Group).

- Some facts about networking ports. This is not something I had to manage over the past days since the Google Compute Engine firewall is easy to set up.

  - Ports under 1024 are considered privileged (or system) ports and normal users cannot use them. Ports 1024-49151 are registered (or user) ports, which IANA assigns and maps to services. Ports 49152-65535 are dynamic (or private) ports and are often ephemeral. 4

  - There are conventional port numbers used for certain services, especially among the privileged ports.

  - IANA does have a registry of port numbers for services, with databases available for download in different formats.
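To tie those port facts back to a NixOS host, a minimal sketch: open only the conventional ports for SSH, HTTP, and HTTPS, and let a hypothetical unprivileged my-app service bind below 1024 through a single capability instead of running as root. The service name and its command are placeholders.

```nix
{
  # Conventional (IANA-registered) ports for SSH, HTTP, and HTTPS; other
  # incoming ports stay closed unless some module opens them.
  networking.firewall.allowedTCPPorts = [ 22 80 443 ];

  # Hypothetical service running as an unprivileged, throwaway user that can
  # still bind to a privileged port (< 1024) through CAP_NET_BIND_SERVICE.
  systemd.services.my-app = {
    wantedBy = [ "multi-user.target" ];
    serviceConfig = {
      ExecStart = "/path/to/my-app --port 80"; # placeholder command
      DynamicUser = true;
      AmbientCapabilities = [ "CAP_NET_BIND_SERVICE" ];
      CapabilityBoundingSet = [ "CAP_NET_BIND_SERVICE" ];
    };
  };
}
```

On a cloud VM, this firewall sits behind the provider's own firewall rules (e.g., the Compute Engine firewall), so a port has to be allowed in both places.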

2022-12-08

TL;DR - I've looked into managing email for my system, which is a sysadmin responsibility, yeah?

Anyway, I've been looking for an alternative email hosting provider that is not Google and found out about mailbox.org from Privacy Guides. They happened to have an annual Christmas campaign that nets me free usage of their standard plan for six months. Talk about timing.

What makes mailbox.org odd to me is the number of services they provide for the price. For €3 a month (about ₱180), you basically get the equivalent of the Google Workspace suite, including 10 GB of email storage (which is huge for me, excluding mailing list discussions) and 5 GB of cloud storage, which is not much but is nice. I would have overlooked mailbox.org's other services and been in it only for the email, buuuut I found some neat things about them. I first misjudged it as a set of low-quality office suite services with email as the flagship, but it apparently uses Open-Xchange (OX), which means it works with OX-compatible apps. Not to mention, their interface and infrastructure are built on it, and the service's maintainers are also involved in developing the OX App Suite. Aaaaand that opened up the world (or rabbit hole) of office suites to me, of all things.

Aside from the services, there are some neat things with the email service itself.

One such example is secure email, which is sent through an address on secure.mailbox.org. 5

Sorry for misjudging mailbox.org, as it turns out to be a solid provider the more I look into it. What mailbox.org provides is an office suite, basically an all-around alternative to Google Workspace. I can see myself being their customer for years, though I mostly feel like it's a waste since I'm only looking for email hosting. However, it is nice for a non-Google Workspace/Microsoft 365 setup if you want a more privacy-oriented workspace suite for your family or group.

At any rate, there are some services that focus exclusively on email hosting, such as…

- MXroute, which has a nice offer considering you get unlimited domains with unlimited email addresses. It costs $45 a year, which is cheaper than other services, but it isn't for me (and I just missed the Black Friday sale, CRAP!). Given my current needs and situation (using only one domain and already on mailbox.org), it isn't the suitable choice since the prices are fairly close (without sales discounts or anything).

- Purelymail is another service purely focused on email. As its motto says, "Cheap, no-nonsense email". It only costs $10 a year and already allows custom domains.

One criterion I left out of the list is spam filtering. Ideally, it should be done on the provider's side, which is the case for all the alternatives listed so far. The question is how actively maintained and how good the spam filtering is. MXroute seems to be on the more active side and reportedly has more positive reviews, especially regarding how emails are sent through their service. As for Purelymail, I haven't found much on that front.

At this point, MXroute is going to be my secondary choice for email hosting. For now, mailbox.org is enough for my needs. Both services need to be configured for sensible spam filtering anyway, and I'm currently on free credit, so I should have time to learn some details about email services in general.

There is also a little trivia I've learned, specifically about email aliases.

In mailbox.org, there are multiple email aliases that can be acquired for free (e.g., postmaster@ADDRESS, hostmaster@ADDRESS, webmaster@ADDRESS, abuse@ADDRESS).

This is apparently a convention, specifically RFC 2142, which strongly encourages certain mailbox names for different parts of a service provider.

Here are some examples from the document:

- abuse@ is for reports of inappropriate public behavior.

- webmaster@ is for HTTP, but practically it's the account for handling issues with the website.

- postmaster@ is for SMTP, typically used for mail services.

2022-12-10

The server is mostly operational, but it required some non-declarative setup beforehand. Not exactly what I'm aiming for, but close enough. Really, the problems mostly come from the way PostgreSQL 15 handles schemas, which I'd like to take advantage of, not only for the latest improvements in the package but also for its recommended practices. It seems the current NixOS service module is not modelled after those practices. The practices I tried to apply are strongly encouraged in version 15, especially given its changes, while the NixOS module tries to cater to the majority of available versions.
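One way to pull that non-declarative step back into the config is a one-shot unit that creates the per-role schema. This is a hypothetical sketch for a gitea role and database, assuming the module's default port and socket directory. The background is that PostgreSQL 15 no longer lets every role create objects in the public schema, so a schema named after the role (picked first by the default search_path of "$user", public) is the recommended layout.

```nix
{ config, ... }:

let
  psql = "${config.services.postgresql.package}/bin/psql";
in
{
  services.postgresql = {
    enable = true;
    ensureDatabases = [ "gitea" ];
    ensureUsers = [ { name = "gitea"; ensureDBOwnership = true; } ];
  };

  # Hypothetical unit: give the `gitea` role its own schema inside the
  # `gitea` database before Gitea starts.
  systemd.services.gitea-db-schema = {
    description = "Create the per-role schema for Gitea";
    after = [ "postgresql.service" ];
    requires = [ "postgresql.service" ];
    before = [ "gitea.service" ];
    requiredBy = [ "gitea.service" ];
    serviceConfig = {
      Type = "oneshot";
      RemainAfterExit = true;
      User = "postgres";
    };
    script = ''
      ${psql} -d gitea -c 'CREATE SCHEMA IF NOT EXISTS gitea AUTHORIZATION gitea;'
    '';
  };
}
```

Depending on the NixOS release, the role-creation step may run in a separate unit rather than in postgresql.service itself, in which case the ordering above would need to point at that unit instead.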

On the other hand, I've configured my server to back up anything appropriate, though most of the focus is on application data. Most of the services, like Vaultwarden, Gitea, and PostgreSQL, have documentation for dumping their data. All I have to do at this point is back them all up with Borg, for which I have a remote repository hosted at BorgBase.

It's seriously NICE to have the configuration coming together in a neat little package. Aaaaaaand the LDAP server is becoming more of an afterthought. Aaaaaaand speaking of afterthoughts, I'm considering hosting a VPN service for my own connections.

On yet another hand, I've been thinking about VPS hosting providers, preparing to move away from Google Cloud Platform.
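A sketch of how the dumps and Borg can be wired together in NixOS: the PostgreSQL and Gitea dumps run first, then a Borg job picks up their output directories. The database names, repository URL, and secret paths are hypothetical, and the schedules are just one choice that keeps Borg running after the dumps.

```nix
{ config, ... }:

{
  # Nightly SQL dumps of the databases.
  services.postgresqlBackup = {
    enable = true;
    databases = [ "gitea" "vaultwarden" ];  # hypothetical database names
    location = "/var/backup/postgresql";
    startAt = "*-*-* 01:00:00";
  };

  # Gitea's built-in `gitea dump`, written to its backup directory.
  services.gitea.dump = {
    enable = true;
    interval = "01:30";
  };

  services.borgbackup.jobs.app-data = {
    paths = [
      config.services.postgresqlBackup.location
      config.services.gitea.dump.backupDir
    ];
    # Hypothetical BorgBase repository URL.
    repo = "ssh://abcd1234@abcd1234.repo.borgbase.com/./repo";
    encryption = {
      mode = "repokey-blake2";
      passCommand = "cat /run/secrets/borg/passphrase";  # hypothetical secret path
    };
    environment.BORG_RSH = "ssh -i /run/secrets/borg/ssh-key";  # hypothetical key path
    compression = "auto,zstd";
    startAt = "*-*-* 03:00:00";  # scheduled after both dumps
    prune.keep = {
      daily = 7;
      weekly = 4;
      monthly = 6;
    };
  };
}
```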

It is a shame as the platform is a nice tool.

I easily created an automated workflow that deploys my existing configurations to the cloud, especially with the gcloud tool.

I've been considering Hetzner Cloud as the VPS host lately as its server options are ridiculously cheap.

About €5 for a complete server with a public IP address, compared to about $30 on Google Cloud.

It also has a command-line utility humorously named hcloud, which should make automation easy.

2022-12-12

The test Linux server is now mostly operational. It is high time to manage a Windows server and make the two communicate, probably through Active Directory, which is apparently an LDAP server with Windows-specific bells and whistles.

Today, I also learned about systemd-tmpfiles, which I used for customizing Gitea from my NixOS configuration.

However, there are some things left to do on this production-server-in-development. Most of them involve authentication services.

- For one, I would surely create an LDAP server just for kicks, specifically an OpenLDAP server.

- I've decided to add one more authentication service, mainly for the web, with single sign-on and social logins. This is apparently separate from an LDAP directory service. For this, I decided to host Keycloak.

- Add the appropriate settings for the already existing services. Since the additional services listed here require more caution, we may as well apply it to the rest of the system. This includes enabling secure TCP/IP connections with SSL for my PostgreSQL service.
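As an example of the tmpfiles approach, here's a sketch that links a custom Gitea home page template from the configuration into Gitea's custom directory. The ./gitea/home.tmpl file is hypothetical; the options come from the NixOS Gitea module.

```nix
{ config, ... }:

let
  inherit (config.services.gitea) customDir user group;
in
{
  systemd.tmpfiles.rules = [
    # Make sure the templates directory exists and belongs to Gitea.
    "d ${customDir}/templates 0750 ${user} ${group} - -"
    # `L+` creates a symlink, replacing whatever already sits at that path.
    # ./gitea/home.tmpl is a hypothetical file kept next to this module.
    "L+ ${customDir}/templates/home.tmpl - - - - ${./gitea/home.tmpl}"
  ];
}
```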
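For the PostgreSQL part, a sketch of TLS-only TCP/IP connections might look like this. The certificate paths, the VPN subnet, and the wg0 interface name are all hypothetical.

```nix
{ ... }:

{
  services.postgresql = {
    enable = true;
    enableTCPIP = true;  # listen on TCP/IP, not just the Unix socket
    settings = {
      ssl = true;
      # Hypothetical paths; the key must be readable by postgres and not world-readable.
      ssl_cert_file = "/var/lib/postgresql/tls/server.crt";
      ssl_key_file = "/var/lib/postgresql/tls/server.key";
    };
    # Accept TCP connections only over TLS and only from a hypothetical VPN subnet.
    authentication = ''
      hostssl all all 10.100.0.0/24 scram-sha-256
    '';
  };

  # Expose the port only on the hypothetical VPN interface.
  networking.firewall.interfaces.wg0.allowedTCPPorts = [ 5432 ];
}
```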

Aside from these authentication services, I reviewed my understanding of CA certificates in relation to HTTP(S). HTTP on its own doesn't verify who is on either end of the connection, nor does it encrypt the traffic. With HTTPS, it is a different story.

HTTPS is essentially HTTP over SSL/TLS. 6 From what I understand of TLS, the server holds a keypair. The public key is embedded in a certificate signed by a certificate authority (CA), which clients use to verify they're talking to the right server before both sides negotiate keys to encrypt the session. The private key never leaves the server and proves that it owns the certificate.

For applications to make use of this, you configure them to point to the certificate files. This is commonly done for web servers (e.g., Nginx, Apache, Caddy). There are also other applications that make use of this, such as databases (e.g., PostgreSQL, MySQL), Kubernetes, and authorization services (e.g., Keycloak).
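With Nginx on NixOS, pointing at certificate files can also be delegated to the ACME module, which obtains and renews a Let's Encrypt certificate and wires the files into the virtual host. A sketch with a hypothetical domain, contact email, and backend port:

```nix
{ ... }:

{
  security.acme = {
    acceptTerms = true;
    defaults.email = "admin@example.com";  # hypothetical contact address
  };

  services.nginx = {
    enable = true;
    recommendedTlsSettings = true;
    virtualHosts."code.example.com" = {  # hypothetical domain
      forceSSL = true;    # redirect HTTP to HTTPS
      enableACME = true;  # request the certificate automatically
      # Or point at existing files instead of using ACME:
      # sslCertificate = "/path/to/fullchain.pem";
      # sslCertificateKey = "/path/to/key.pem";
      locations."/".proxyPass = "http://127.0.0.1:3000";  # hypothetical backend
    };
  };

  networking.firewall.allowedTCPPorts = [ 80 443 ];
}
```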

2022-12-15

Today's theme is secrets management. While this is already handled in my NixOS setup with sops, the remaining problem is keeping the keys that decrypt those secrets safe.

To solve this problem, let's lay out our current situation:

- There are private keys in different formats: GPG, SSH, and age. Not to mention remote key services such as GCP KMS, Azure Key Vault, HashiCorp Vault, and AWS KMS.

- Proper storage for these keys. This is especially important for GPG, which revolves around your identity. As I don't have an iota of an idea how to do it right, I followed someone's guide instead. More specifically, I followed the resource recommended in that post, the subkeys page from the Debian Wiki.

- Managing multiple keys. I want to properly learn how to manage keys for different purposes.

- Backing up properly, which is already done with Borg. Hooray for me…
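On the server side, one way to shrink the number of keys to guard is letting sops-nix decrypt with an age key derived from the host's SSH key, so only my personal keys (used to encrypt the secrets file) need careful offline storage. A sketch, assuming the sops-nix module is already imported; the secrets file path and secret names are hypothetical:

```nix
{ ... }:

{
  sops = {
    defaultSopsFile = ./secrets/secrets.yaml;  # hypothetical path
    # Derive the age decryption key from the host's SSH key, so there's one
    # less private key to store on the server itself.
    age.sshKeyPaths = [ "/etc/ssh/ssh_host_ed25519_key" ];

    # Each entry is decrypted to a file under /run/secrets/.
    secrets."borg/passphrase" = { };
    secrets."wireguard/private-key" = {
      owner = "systemd-network";
      mode = "0400";
    };
  };
}
```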

2022-12-18

Now, the start of properly setting up the authentication services, more specifically Keycloak and OpenLDAP.

- For OpenLDAP, the project has nice documentation on configuring and administering an LDAP server. I practically have no choice, since alternatives such as FreeIPA and Authelia aren't available as NixOS modules at the time of writing.

- For Keycloak, the documentation has a nice structure to it including references and "Getting started" guides.

Though, all of my time was spent on learning OpenLDAP instead. Looking at the examples, it is quite verbose. It is becoming similar to the Google Cloud Platform documentation problem, where it gets overwhelming and requires going back and forth through pages. Don't get me wrong, it is nicely structured, but it is verbose. I think I need some more time to absorb this. Even after skimming it, I cannot clearly see the bigger picture.

Conceptually, it is pretty simple. Most of the details are hidden behind conventions, which is where my problems lie. It really requires familiarity, which only comes with time. Not to mention there aren't many examples on the whole wide web, so I cannot freely experiment. (Thank good God for NixOS making it easy to experiment by building VMs, though.)

I'll put off studying Keycloak for later. While I can see myself using Keycloak more, LDAP seems to be more common judging from the job listings, which is why I'm studying it in the first place, Active Directory being one of the most prominent examples. Speaking of which, I should really get to managing a Windows server at some point, preferably in early January, as I'm not done configuring the (NixOS) Linux server yet.

2023-01-01

Took some holiday vacation. Back to normal scheduling.

I've been deciding on migrating to Hetzner Cloud since it is way cheaper than Google Cloud Platform. The disadvantage is that there's no way to provision from a custom image except with a kexec-based one. However, you can easily initialize a NixOS system with nixos-infect, a script that converts a traditional Linux installation into NixOS.

That's pretty much it for the most part. Most of the work was migrating and getting familiar with Hetzner Cloud. It is pretty light, but most of the system isn't working due to ACME. I'll just wait for some time and tinker with it some more.

2023-01-04

I'm now taking a concrete step toward showing some credentials by completing certain courses on Coursera. It is mostly for the certificates, just to show that I have self-drive. Fortunately, Coursera has a financial aid program which is quite easy to get approved for, though it does take some time.

For now, I've been completing part of the Google IT Support Professional Certificate, mostly the security side, as it is my weakest area. I'm also taking the Cloud-Native Development with OpenShift and Kubernetes specialization, also for the certificate, but learning more about Red Hat systems seems interesting. Fun and beneficial: double the benefits!

For now, the self-managed server has been stopped since I'm just waiting for the certificate cooldown. The server should be back up in a couple of days.

2023-01-06

The server is back up and running!

I also added an Atuin sync server, especially since I use Atuin more often now and am slowly needing to sync my history. While the developer's sync server is nice, in the end I decided to try managing one myself. Fortunately, the service is already available as a NixOS module, so there's not much trouble there.

Next up, I also replaced the OpenLDAP server with Portunus, which is already available as a NixOS module. I haven't tried fully managing it yet, though; I've only scratched the very surface of managing and using LDAP. I should find out more about it once I continue with the courses I previously mentioned in this journal.

Another thing is adding a new component to my server: a nice storage box. Specifically, I added a storage box from Hetzner, which is surprisingly cheap at €4 for a terabyte. What's more, this storage box has some neat features, including SSH and Borg support. This instantly made me decide to go with a Hetzner storage box instead of BorgBase, which is nice, but with the budget in mind, I get more mileage from Hetzner's offer.

A complete self-managed setup for under €9 a month? That is very nice, especially on a budget. I'm very satisfied with Hetzner so far.
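The module keeps the sync server small to configure. A sketch, where the values shown are choices for illustration rather than anything required:

```nix
{ ... }:

{
  services.atuin = {
    enable = true;
    host = "127.0.0.1";  # keep it behind a reverse proxy or VPN
    port = 8888;
    # Flip to true temporarily to register your own account, then close it.
    openRegistration = false;
  };
}
```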

Even with a meager job pay, my whole setup is quite cheap. Here's a table of the expenses.

| Item | Cost (EUR per month) |
|---|---|
| Hetzner VPS | 5.35 |
| Hetzner storage box | 3.5 |
| mailbox.org account | 3 |
| foodogsquared.one domain | 2 |
| Total | 13.85 |

2023-01-11

Looked into properly configuring fail2ban which apparently does not do much by default.

The project's documentation is a bit scattered across its website, wiki, and source code. Most of the knowledge I picked up came from the existing upstream configurations, with the manual as the accompanying starting point to make things connect.

Another neat thing is that it can apparently read systemd journals. This makes it easier for me, as I would like to keep my services managed with systemd.

This is where I learned about matching journal fields, which is what journalctl is primarily used for.

Even the -u UNIT option that I always use just generates certain journal field matches under the hood.

My usage of journalctl is pretty basic, as seen in Command line: journalctl.

I've only done basic matching on a unit, some basic journal management (e.g., log rotation, pruning), and monitoring services.
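Putting the journal matching to use, here's a sketch of a hypothetical fail2ban jail for Vaultwarden that reads from the journal instead of a log file. The journalmatch line uses the same field syntax as journalctl. The failregex is an assumption based on Vaultwarden's failed-login message and should be checked against real logs with fail2ban-regex.

```nix
{ ... }:

{
  services.fail2ban = {
    enable = true;
    maxretry = 5;
    bantime = "1h";
    # Hypothetical jail; same match syntax as `journalctl _SYSTEMD_UNIT=vaultwarden.service`.
    jails.vaultwarden = ''
      enabled = true
      backend = systemd
      journalmatch = _SYSTEMD_UNIT=vaultwarden.service
      filter = vaultwarden
      port = http,https
    '';
  };

  # Filter definition for the jail above (the regex is an assumption).
  environment.etc."fail2ban/filter.d/vaultwarden.local".text = ''
    [Definition]
    failregex = ^.*Username or password is incorrect\. Try again\. IP: <ADDR>\. Username:.*$
    ignoreregex =
  '';
}
```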

Overall, diving into configuring fail2ban is not exactly a great experience. It reminds me of the situation in the Nix ecosystem: a great tool hindered by the sorry state of its documentation. Except this time, it's somewhat worse, with the outdated manual and having to pick up the scattered pieces myself. I don't know enough to make more insightful comments, but this comes from my experience as an outsider trying to dive into it. Delving into Nix has prepared me for this type of situation, and I'm not liking it.

At the end of the day, fail2ban is a great tool just hindered by its documentation. The lack of good user documentation just means you'll have a harder time getting to know things if starting out and wanting to know more beyond the basic things.

To quickly get up to speed with fail2ban, I recommend starting with the previously linked manual and looking at the upstream config files for examples, which are commented in places. Some of the comments in the upstream config files even double as basic documentation, as if an admin is expected to jump in and see how things work behind the scenes, which I think is the intent.

2023-01-12

Just a quick update on the state of fail2ban's documentation: apparently it has manual pages, which I completely missed because the package from nixpkgs doesn't include them. I added them to the package definition and created a pull request for it.

Now, the manual pages are the user documentation I was looking for.

They're actually very nice and detailed, with jail.conf.5 being the go-to reference, while the manual pages for the executables (e.g., fail2ban-regex.1, fail2ban.1) are nice and brief.

Sure, it's scattered, but that's just the nature of Unix manual pages.

I'm retracting my earlier statements that unfairly described the documentation as poor.

Instead, I'd now call it pretty good. My opinion that Nix is a great tool with poor documentation remains unchanged. :)

On the other hand, I started configuring Portunus as an LDAP server. I really want to make this work despite not having much use for it, just for the sake of learning other ways to authenticate outside of web logins. One of the handy features of Portunus is applying a seed file, which declares the groups and users for that LDAP server.

I've also started to modularize my Plover NixOS config since it is getting really big. An embarrassing side story: I once accidentally deleted the modularized Nix files and had to rewrite them, not realizing that Neovim still had those files as buffers in memory. So here's something that will hopefully help next time you find yourself in that situation: most text editors, including Visual Studio Code and Emacs, keep open buffers in memory, so you can restore the files just by saving them again. Though, if you close those buffers after the deletion, they're gone for good.
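The Portunus module exposes the seed file as an option, so the LDAP directory's initial users and groups can live next to the rest of the config. A sketch with a hypothetical domain, base DN, and seed file path (the seed file's JSON format is described in Portunus's own documentation):

```nix
{ ... }:

{
  services.portunus = {
    enable = true;
    domain = "auth.example.com";        # hypothetical domain
    ldap.suffix = "dc=example,dc=com";  # hypothetical base DN
    # JSON file declaring the initial groups and users.
    seedPath = ./portunus-seed.json;    # hypothetical path
  };
}
```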

2023-01-13

Continued my self-study for the Coursera certificates. Despite seemingly doing it only for the certificates (which you could say, and I'm not denying it), the courses I've chosen are pretty interesting, especially the IT fundamentals specialization from Google. I mostly proceeded to the part discussing cryptography and its applications.

There is something interesting going on, as it is leading me to several topics, such as the practical uses of a VPN. I always thought a VPN was mainly used to browse the internet anonymously, but it turns out that isn't the only use. A VPN service can be used for several things.

- Getting around geo-restricted content.

- Safely accessing sensitive services on a different network (e.g., your VPSes, your home network) from outside.

- Piracy.

You can still use a self-managed VPN on a VPS as a privacy tool, but that depends on your trust in the hosting provider and how much you care about privacy (if at all). However, you're pretty much limited by the system's configuration, such as location: it is most likely hosted in only one location, whereas multiple locations are pretty much the main incentive for using a VPN provider such as Mullvad or NordVPN. Not to mention that your self-managed VPN traffic is effectively shared with the VPS provider. It is a matter of judgement whether you tolerate that.

So far, I've only considered self-managing a VPN service on my VPS instance, which is most likely happening anyway. For now, I'm just scouting resources for configuring OpenVPN, which is already available as a NixOS module. I have a feeling I'll face some difficulty in the coming days, especially with the networking parts of the system configuration.

2023-01-14

My feeling of dread from yesterday was right on the mark. (I guess it's not surprising, considering I have practically zero experience and knowledge of networking.)

I started from the bottom up, knowing little about networking. More specifically, I'm trying to configure the networking on my server, starting with IPv6 support, which Hetzner Cloud already provides. I just have to figure out how to do that by tinkering with these network-related thingamajigs on my system. Thankfully, Hetzner has a dedicated page on the basics of IPv4 and IPv6, which is very helpful for understanding the basics.

Most of the resources for configuring network settings on Hetzner Cloud servers are aimed at traditional Linux distros. Searching the NixOS Discourse instance, however, turned up some discussions on that exact topic, which is convenient. More specifically, this post, which describes setting up the network with systemd-networkd, made me learn about configuring networks with systemd directly.

I've learnt more about IPs with the following resources.

- As aforementioned, Hetzner has a dedicated page on the basics of IPv4 and IPv6.

- Wikipedia has a page of reserved IP addresses which is handy.

- IETF RFC 4864, which showcases some features of IPv6 alongside its use cases.

- IETF RFC 1918, which describes the allocation of private IPv4 addresses.

- Cisco has a document on the overview of IPv6.

2023-01-15

More studying about networking. Specifically, I'm trying to configure my networking with systemd-networkd, replacing the traditional script-based networking in NixOS. I've been tempted to learn it since it has some nice features compared to NixOS's traditional networking.

The first try was a disaster because I didn't realize I had misconfigured the routes for the network.

More specifically, I misunderstood the gateway address: I assumed it was the private IP address attached to my Hetzner server, not realizing the gateway is at the private address 172.16.0.1.

This resulted in the following error logs.

Jan 15 05:47:19 nixos systemd-networkd[18933]: ens3: Could not set route: Nexthop has invalid gateway. Invalid argument
Jan 15 05:47:19 nixos systemd-networkd[18933]: ens3: Failed

I'm also trying to start an OpenVPN server for the deployed server's local network. This is also for practical purposes: hiding some of the more sensitive services, such as my Keycloak instance and LDAP server. 7 Apparently, it doesn't have username/password authentication out of the box, and that has to be added by installing a plugin. I'll try that route, but I'm also very tempted to use LDAP for it, considering OpenVPN supports it, and it gives me a valid excuse to use LDAP.

The OpenVPN documentation is very nice, with a directory of community resources that includes a HOW-TO document, a reference manual, a document on hardening OpenVPN security, and a tutorial on ethernet bridging. Not to mention, OpenVPN has a community wiki with lots of explanations of concepts. Most importantly, it has a set of offline documents that should be included with the package when you install it. I recommend starting with the offline documents, especially if you're unsure where to start with the online ones.

The only thing I fear with a VPN service is the amount of outgoing bandwidth, but seeing as Hetzner Cloud includes 20 TB of outgoing traffic for free, which is more than enough (an understatement), I don't think it will be a problem. With that said, I haven't fully configured OpenVPN yet, as I'm just exploring the documentation and creating a basic configuration from it. It's not yet complete with client and server profiles, but it is getting there.
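For a sense of what a basic configuration looks like through the NixOS module, here's a sketch of a routed (tun) OpenVPN server. The instance name, subnet, and certificate paths are hypothetical, and it covers only certificate-based authentication, not the username/password or LDAP options discussed earlier.

```nix
{ ... }:

{
  # Hypothetical routed OpenVPN server; certificate paths are placeholders.
  services.openvpn.servers.main = {
    autoStart = true;
    config = ''
      port 1194
      proto udp
      dev tun
      # Hand out addresses to clients from this (hypothetical) subnet.
      server 10.8.0.0 255.255.255.0
      ca /var/lib/openvpn/ca.crt
      cert /var/lib/openvpn/server.crt
      key /var/lib/openvpn/server.key
      # No static DH parameters; use ECDH (OpenVPN 2.4+).
      dh none
      keepalive 10 120
      persist-key
      persist-tun
    '';
  };

  networking.firewall.allowedUDPPorts = [ 1194 ];
}
```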

2023-01-16

I've been recommended to look into Wireguard, which is supposed to be more performant than OpenVPN and is built into the Linux kernel. Aaaaaand it is easier to use. Not to mention, it is also supported by systemd. Looking into the ecosystem, I see that Wireguard has an Android app, which ticks a box on my checklist. However, for the time being, I'm continuing with OpenVPN since it has more authentication options, whereas Wireguard is purely public-key-based.

From what I can see, Wireguard is simpler and faster.

In my understanding, Wireguard is indeed simpler to configure.

In Wireguard, there is no server; instead, it deals with peers, where each peer in the network is configured with the others' public keys.

From the initial reading, I was able to easily configure Wireguard.

However, I'm more interested in configuring it with systemd-networkd, which did take some time since I'm still grokking the service.

In the end, I was able to configure a peer but found out I also have to configure my desktop as the other side.
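For reference, a Wireguard interface through systemd-networkd on NixOS roughly takes a netdev (the interface and its peers) plus a network (its address). A sketch with hypothetical addresses, key path, and a placeholder public key; it uses the nested wireguardPeerConfig form from NixOS releases of that time, which I believe newer releases flatten.

```nix
{ ... }:

{
  systemd.network = {
    enable = true;

    netdevs."50-wg0" = {
      netdevConfig = {
        Kind = "wireguard";
        Name = "wg0";
      };
      wireguardConfig = {
        # Hypothetical path; must be readable by the systemd-network user.
        PrivateKeyFile = "/run/secrets/wireguard/private-key";
        ListenPort = 51820;
      };
      wireguardPeers = [
        {
          wireguardPeerConfig = {
            PublicKey = "<desktop public key>";  # placeholder
            AllowedIPs = [ "10.100.0.2/32" ];    # the desktop's tunnel address
          };
        }
      ];
    };

    networks."50-wg0" = {
      matchConfig.Name = "wg0";
      address = [ "10.100.0.1/24" ];  # this server's tunnel address
    };
  };

  networking.firewall.allowedUDPPorts = [ 51820 ];
}
```

The desktop side mirrors this: its own private key, the server's public key as the peer, and an Endpoint pointing at the server's public address.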

For now, I'll leave the progress here as I'm continuing to configure OpenVPN just for the time being.

A VPN is an interesting (and, at this point, must-have) component to add to my server, not only for learning networking concepts but also for practical reasons. Very nice. I just have to figure out how to configure it like in the following plan.

As for configuring OpenVPN, I've yet to run a server since I'm still figuring out network devices in general.

The concepts are still bouncing around in my head as I try to make sense of how things fit together.

systemd-networkd, while interesting, is a bit overwhelming with the concepts.

For this, I'll leave a short list of guidelines on how to get started with it.

- Start with the systemd-networkd.service(8) manual page. It is the root of the concepts, not to mention it quickly introduces the related components of the service.

- The manual pages for its related configuration files (i.e., systemd.link(5), systemd.netdev(5), systemd.network(5)) are all must-reads, especially since their later parts contain a comprehensive list of examples showcasing different setups.

- networkctl is going to be your best friend for managing the networking setup. Don't forget to refer to the networkctl(1) manual page for more details.

I think I bit off more than I can chew, juggling between configuring the networking on my server, OpenVPN, and Wireguard. It is an interesting experience as I'm trying to map things together just from reading the documentation. Though, it feels like somewhat wasted effort, as most of the time I'm only trying to map things together while being overwhelmed. Not exactly a good practice.

I'm slowly gaining confidence with networking concepts. At some point, I'm going to review them with a related Coursera course.

Next up, I think I will configure systemd-resolved, as I'm having a little trouble understanding related parts of network configuration such as DHCP and DNSSEC.

What are all these, man?!

2023-01-17

I am familiar enough with IP addresses, so I'm moving on to understanding DNS. The primary reason: systemd has a resolver service, and I'm interested in interacting with it.

But first, I have to understand what DNS even is. Fortunately for me, there is a comic series that explains exactly that. 8 I highly recommend it.

At this point, I'm juggling between configuring Wireguard for the network and preparing to configure systemd-resolved later (or at least trying to understand it). I'm also adding a properly configured software firewall like nftables, although that is blocked by problems in the associated NixOS module: it doesn't seem to generate a configuration without errors. Previously, I used iptables for a short while until I learned it was apparently deprecated, so I held off. It also had problems, with the resulting firewall making the network reject all incoming connections.

So, for this firewall problem, I tried the simplest solution: the most sensible and minimal configuration. I tried the following nftables-based firewall configuration on my desktop:
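Since systemd-resolved is the next target, here's a minimal sketch of enabling it on NixOS. The DNSSEC mode and the fallback servers (Quad9's public resolvers) are choices for illustration, not recommendations from the sources above.

```nix
{ ... }:

{
  services.resolved = {
    enable = true;
    # "allow-downgrade" validates DNSSEC when the upstream server supports it
    # and falls back to plain DNS otherwise; "true" enforces validation.
    dnssec = "allow-downgrade";
    # Used only when no other DNS servers are configured (e.g., none from DHCP).
    fallbackDns = [ "9.9.9.9" "2620:fe::fe" ];
  };
}
```

resolvectl status then shows which servers and DNSSEC setting each link ends up with.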

{
  networking = {
    nftables.enable = true;
    firewall = {
      enable = true;
      allowedUDPPorts = [ wireguardPort ];
    };
  };
}

This works on the desktop workstation host so far. It serves as a nice starting point for learning about nftables. There are other resources I recommend:

- Start with the nft.8 manual page. It is the canonical user reference, with nice introductions to the related concepts, syntax, and options.

- For other documents, their community wiki has a nice list of nftables-related documents.

For now, I haven't written an nftables script yet. Looking at the documents, it should take an afternoon to learn just enough to be dangerous and make stupid decisions.

So far, my experience with software firewalls hasn't been great, but that won't deter me. I want an operating system with such features, especially integrated with tools like fail2ban, which can use the firewall to ban a host completely.
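As a starting point for that script, here's a sketch of a hand-written ruleset through NixOS, replacing the generated firewall rather than adding to it. The open ports, the wg0 and ens3 interface names, and the NAT setup for letting VPN peers out through the server are assumptions for illustration.

```nix
{ ... }:

{
  # Use a hand-written ruleset instead of the NixOS-generated firewall.
  networking.firewall.enable = false;

  networking.nftables = {
    enable = true;
    ruleset = ''
      table inet filter {
        chain input {
          type filter hook input priority 0; policy drop;
          ct state established,related accept
          iifname "lo" accept
          # IPv6 neighbour discovery needs ICMPv6, so don't drop ICMP wholesale.
          meta l4proto { icmp, ipv6-icmp } accept
          # DHCPv4/DHCPv6 client replies.
          udp dport { 68, 546 } accept
          tcp dport { 22, 80, 443 } accept
          udp dport 51820 accept
        }

        chain forward {
          type filter hook forward priority 0; policy drop;
          ct state established,related accept
          # Let VPN peers out through this host.
          iifname "wg0" accept
        }
      }

      # Masquerade forwarded traffic leaving the public interface.
      table ip nat {
        chain postrouting {
          type nat hook postrouting priority 100;
          oifname "ens3" masquerade
        }
      }
    '';
  };

  # The kernel must forward packets for the forward chain to matter.
  boot.kernel.sysctl."net.ipv4.ip_forward" = 1;
}
```

Keeping SSH open in the input chain matters here: a ruleset that drops port 22 on a remote server means falling back to the provider's console.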

2023-01-18

Welp, today's theme is unfortunate server update timing. Let's start with the end state of the server: its network became unreachable from the outside.

This story starts with an impatient person who tries to upgrade repeatedly without success, encountering problems similar to those described in this issue. I cannot exactly reproduce this bug as I don't understand enough of how deploy-rs really works, but I mostly think this is a server-side issue. To be more specific, I could not successfully deploy the updates, as they always ended with a timeout for whatever reason. As described in the linked issue, this is specifically tied to the magic rollback feature, as seen in the following logs from a deploy attempt:

⭐ ℹ️ [activate] [INFO] Magic rollback is enabled, setting up confirmation hook...
👀 ℹ️ [wait] [INFO] Found canary file, done waiting!
🚀 ℹ️ [deploy] [INFO] Success activating, attempting to confirm activation
⭐ ℹ️ [activate] [INFO] Waiting for confirmation event...

Anyway, as this impatient person grew tired, they decided to push the updates without the rollback feature. It was a fatal mistake. This is exactly where NixOS configuration rollback capabilities would have been very useful.

The temporary outage was caused by an improper routing configuration, as I haphazardly copy-pasted it from the internet without taking a closer look. The following code listing is the erroneous part of the configuration.

{
  systemd.network.networks."20-wan" = {
    routes = [
      # Configuring the route with the gateway addresses for this network.
      { routeConfig.Gateway = "fe80::1"; }
      { routeConfig.Destination = privateNetworkGatewayIP; }
      { routeConfig = { Gateway = privateNetworkGatewayIP; GatewayOnLink = true; }; }

      # Private addresses.
      { routeConfig = { Destination = "172.16.0.0/12"; Type = "unreachable"; }; }
      { routeConfig = { Destination = "192.168.0.0/16"; Type = "unreachable"; }; }
      { routeConfig = { Destination = "10.0.0.0/8"; Type = "unreachable"; }; }
      { routeConfig = { Destination = "fc00::/7"; Type = "unreachable"; }; }
    ];
  };
}

This pretty much made the server unreachable from the outside. Thankfully, it could still reach global networks from the inside. While access through SSH was no longer possible, Hetzner's cloud console saved the day. It gives you console access as if you're physically at the machine, so the server can still be recovered.
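A more conservative starting point leaves out the private-range "unreachable" routes entirely and only sets what the provider documents. A sketch, assuming IPv4 comes from Hetzner's DHCP server on ens3 and a static IPv6 address from the server's assigned /64 (the prefix below is a documentation placeholder), with fe80::1 as the IPv6 gateway:

```nix
{ ... }:

{
  networking.useNetworkd = true;

  systemd.network.networks."20-wan" = {
    matchConfig.Name = "ens3";
    # IPv4 address, gateway, and routes from the provider's DHCP server.
    networkConfig.DHCP = "ipv4";
    # Static IPv6 from the assigned /64 (placeholder prefix).
    address = [ "2001:db8:1234:5678::1/64" ];
    routes = [
      # Link-local IPv6 gateway.
      { routeConfig.Gateway = "fe80::1"; }
    ];
  };
}
```

networkctl status ens3 shows whether the addresses and routes actually landed before adding anything more elaborate.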

2023-01-19

Welp, I decided to take a different approach to journaling. Here's what I want to do and whether or not I completed it on time.

- Configure systemd-networkd to properly configure network devices with automatic IPv6.

- Learn nftables for IP forwarding and additional Wireguard setup

I was not able to learn about nftables at time. Most of the time, I've been trying to parse what is going on exactly with IP addresses and how to setup a networking setup with a system. I found myself overwhelmed with the concepts of dynamically generating IPs which apparently has multiple ways to generate them which is especially true with IPv6 which is already something that I'm barely familiar with.

For a short recap, similarly to IPv4, IPv6 have assigned address ranges for private networks. These interfaces are not going to generate and assign IP themselves, you still have to assign it. However, you're dealing with IPv6. Manually assigning IPv6 IPs is not often worth especially with subnetting. And so there are ways to generate them…

- Stateless Address Autoconfiguration (SLAAC) generates IP addresses from the prefixes that routers on the link announce through Router Advertisements. No additional servers or manual configuration of hosts are necessary. The neat things about it are that the process is decentralized, requires minimal changes, and only needs local information. I recommend sticking to this.

- Good ol' DHCP, except for IPv6, referred to as DHCPv6. Similarly, the host sends a request to the server and receives an address, which is then automatically assigned to a network link. While this can be nice for restricting which addresses are handed out for that zone, it is centralized. Once the server shuts down, the network will likely fall over, since the addresses the links hold are dynamically configured and can no longer be renewed.

One more thing: apparently, you can combine statically and dynamically configured IP addresses. I always thought it was a choice between static and dynamic IP configuration, since most interfaces I've interacted with present it that way (or at least that was my impression). It makes sense once you know that multiple addresses can be assigned to an interface and that dynamically generated addresses are virtually indistinguishable from statically assigned ones. It's just IPs all the way down.
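A minimal sketch of combining the two, written as a NixOS configuration fragment in the same style as the routing configuration above. The interface name `eth0` and the static address `2001:db8::3/64` (from the 2001:db8::/32 documentation prefix) are placeholders, not values from my setup. The link keeps one static address while SLAAC adds more from Router Advertisements; DHCPv6 is left commented out as the centralized alternative.

```nix
{
  systemd.network.networks."20-wan" = {
    # Placeholder interface name; adjust to the actual network device.
    matchConfig.Name = "eth0";

    # Statically assigned address (documentation prefix used as a placeholder).
    address = [ "2001:db8::3/64" ];

    networkConfig = {
      # SLAAC: accept Router Advertisements and derive additional addresses
      # from the advertised prefixes, alongside the static address above.
      IPv6AcceptRA = true;

      # DHCPv6: uncomment to also run the DHCPv6 client on this link.
      # DHCP = "ipv6";
    };
  };
}
```

Running `ip -6 addr show eth0` afterwards should list the static address next to any SLAAC-generated ones, which is exactly the "just IPs" point above.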

I also discovered a valuable tool that I should've thought of at the beginning of my struggle with IP addresses: an IP calculator. More specifically, ipcalc, since it runs in the same environment where you'll be configuring the networking setup anyway.

```
nix run nixpkgs#ipcalc -- 2001:5eca:de53::3
Full Address: 2001:5eca:de53:0000:0000:0000:0000:0003
Address: 2001:5eca:de53::3
Address space: Global Unicast
```

2023-01-21

Stumbling into IP problems. Again.

This time, it's about application services.

It challenged my understanding of how IP addresses relate to applications, because I didn't know applications could be hosted on an interface other than localhost.

Now, those host options (or what have you) make sense.

The solution is simply to reconfigure them to listen on a different host interface.

The most tedious part is manually assigning and remembering them, so I put the interface hosts in a set and just refer to that instead.
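A sketch of that idea as a NixOS module, assuming two services for illustration. The set name `hosts` and the addresses in it are hypothetical and must already be assigned to some interface on the machine; Vaultwarden and Keycloak only stand in for whatever services are actually deployed.

```nix
{ ... }:

let
  # Hypothetical listen addresses, one per service. They must already exist
  # on an interface of this machine (e.g., a private network interface).
  hosts = {
    vaultwarden = "10.0.0.2";
    keycloak = "10.0.0.3";
  };
in
{
  # Vaultwarden takes its listen address from the ROCKET_ADDRESS setting.
  services.vaultwarden.config = {
    ROCKET_ADDRESS = hosts.vaultwarden;
    ROCKET_PORT = 8222;
  };

  # Keycloak takes its listen address from its http-host setting.
  services.keycloak.settings.http-host = hosts.keycloak;
}
```

The set is the single place to look up or change an address; anything else that needs it (a reverse proxy, firewall rules) can refer to `hosts.<name>` as well.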

With this in mind, I fell into a mini rabbit hole of networking-related things. Most notably, I was looking for a way to automatically assign IPs to applications, if possible.

The closest thing I have seen so far is network namespaces, a Linux kernel feature. Aside from isolation and controlled sharing, network namespaces allow you to assign prefixes to interfaces. This seems to fit my use case of assigning IPs to different services without doing it manually. I checked whether systemd can do this, and apparently it can't. There is interest in it, and while there is a pending implementation, it seems to be dormant, which is unfortunate considering fellow systemd contributors have also expressed interest in this feature.

Another point of interest was "properly" deploying a Keycloak instance. I haven't done it yet, since I was supposed to do it after configuring the VPN (or whatever tunneling service I end up managing). This is where I found an alternative to Keycloak named Zitadel. It seems nice considering it can be self-hosted and deployed from a single binary. As of this entry, there is no package, module, or even a mention of it in the nixpkgs repository. Seems like a nice opportunity to try packaging it and creating a module for it. But right now, I have no interest in fully self-managing it, considering Keycloak is a popular option.

Besides an alternative, I also found a complement to Keycloak (or at least to the type of service Keycloak offers) called privacyIDEA, which focuses on two-factor authentication. While Keycloak supports two-factor authentication, its one-time password support is limited to TOTP/HOTP. privacyIDEA supports more than that through its ecosystem of modules. Not to mention, privacyIDEA has a Keycloak provider, which makes integrating the two easier. I may consider adding it to my half-full plate of self-managed services.

I'm also reconsidering going back to a bare OpenLDAP server, but it may just be me. Portunus is pretty great so far, but I haven't properly made (or even connected to) an LDAP profile yet. I need to configure Portunus properly, especially since it has options to stay only within the private network. They're just not exposed in its NixOS module, which should be trivial to add. I'll keep a PR in mind for the future once I've properly deployed the previously mentioned services.

Their quality may vary, but if you have no idea or connections to start with, they're a good indicator as long as there are other, more credible sources.

It's a basic fact, yes, but I haven't paid attention to these details yet.

I don't know if that's even safer, but as long as the emergency is used exclusively for its purpose, I think it's safe.

They're also called dynamic and/or private ports.

Not supported for all providers, though.

An important detail: SSL is the predecessor of TLS, and TLS has replaced it nowadays, but most documents still refer to SSL. In other words, the two terms are used interchangeably.

Though, I'm not sure whether some services are appropriate to hide behind it.

Just found it on the first page, which is nice for me. Though, it is at the bottom of Google's results while in the middle of Brave's. I'm guessing it's because most Brave users are already tech-savvy and thus tend to get higher-quality results for these types of topics.